NASA GVIS

Science in Mixed Reality 🔭

Full Stack Dev, UX Designer

June 2020 - August 2020

NASA GVIS Lab Members (~10)

Overview

Throughout the summer of 2020, I contributed to a variety of projects and prospective technologies at GVIS that allow for more powerful visualizations at NASA.

I worked on the development of MR projects and also explored remote experiences and mobile applications, both of which have become more relevant to graphics work due to the COVID-19 pandemic.

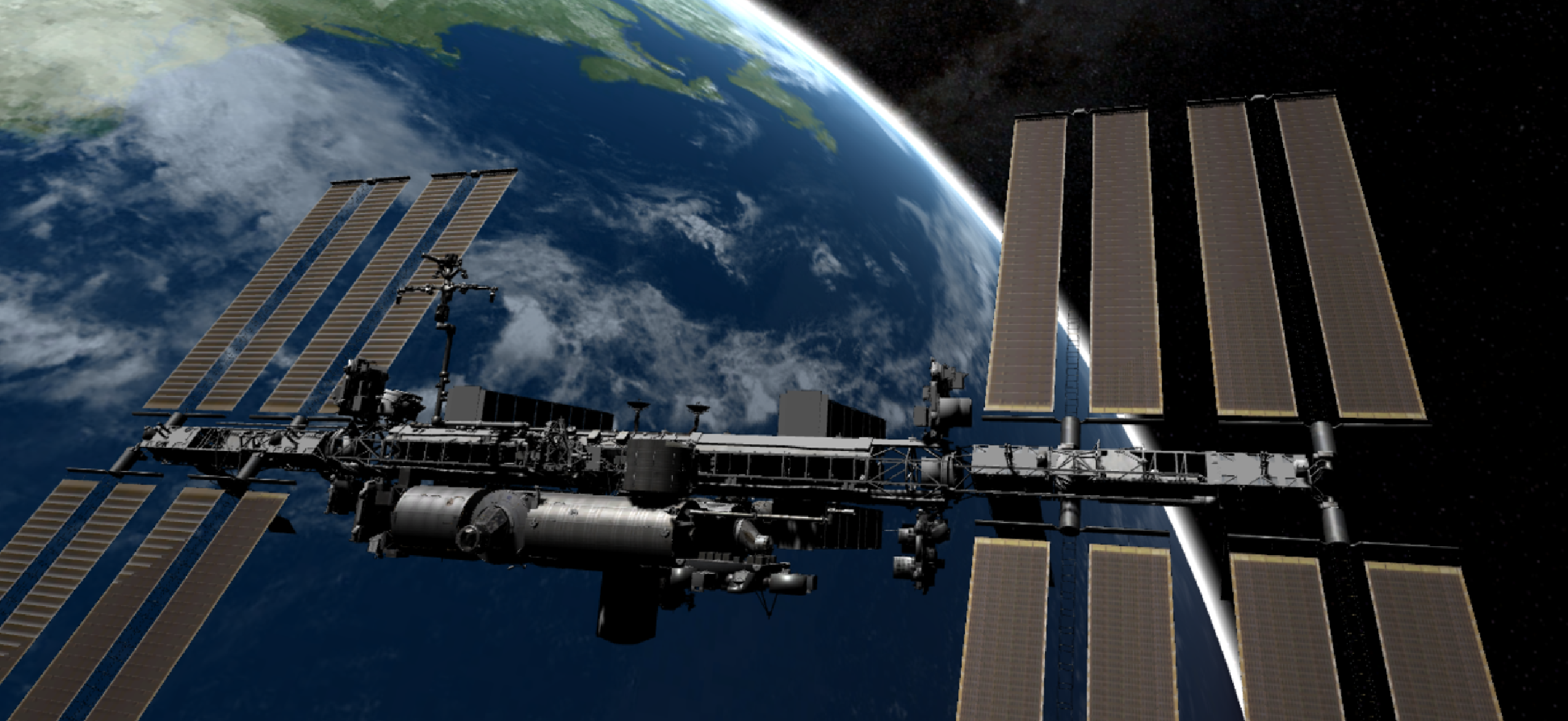

ISS

Interactive International Space Station Visualization

The International Space Station (ISS) visualization project allowed me to experiment with natural user interface techniques while developing a practical application. I optimized the application for the Oculus Quest, which supports hand tracking via infrared sensing. This makes it possible to build applications that support both handheld controllers and controller-free hand tracking.

I went through an iterative process at this stage, initially designing the environment as a room in which the user views a model of the ISS. However, I eventually decided that viewing a life-sized model of the ISS in orbit would be a more informative and visually appealing experience.

To make the scene more realistic, I applied a constant rotation to the Earth to give the viewer the illusion that the ISS was orbiting, as well as a constant rotation to the scene’s light source to mimic the lighting shifts caused by Earth’s rotation. These changes resulted in a scene that was both convincing and immersive.
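The two constant rotations can be sketched in a few lines. This is an illustrative Python sketch, not the project's Unity code: the speeds, the frame rate, and the names `advance_angle` and `simulate` are all assumptions made for the example. The point it shows is that scaling each step by the frame time keeps the rotation speed constant regardless of frame rate.

```python
def advance_angle(angle_deg: float, speed_deg_per_s: float, dt_s: float) -> float:
    """Rotate at a constant angular speed for dt_s seconds and wrap to [0, 360)."""
    return (angle_deg + speed_deg_per_s * dt_s) % 360.0


# Hypothetical speeds, chosen only for illustration.
EARTH_SPEED_DEG_PER_S = 2.0
LIGHT_SPEED_DEG_PER_S = 0.5


def simulate(seconds: float, fps: int = 72) -> tuple:
    """Advance both rotations frame by frame and return (earth_angle, light_angle)."""
    earth_angle = 0.0
    light_angle = 0.0
    dt = 1.0 / fps  # time covered by one frame
    for _ in range(round(seconds * fps)):
        earth_angle = advance_angle(earth_angle, EARTH_SPEED_DEG_PER_S, dt)
        light_angle = advance_angle(light_angle, LIGHT_SPEED_DEG_PER_S, dt)
    return earth_angle, light_angle
```

Calling `simulate(10.0)` at different `fps` values gives approximately the same pair of angles, which is the property that keeps the scene looking the same on faster or slower hardware.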

I added functionality that lets the user highlight components of the ISS preview model by tapping component names in a scrollable list in the hand menu. I also made the labels turn toward the user so that, however the ISS model rotates, they remain legible.
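The label behavior is a form of billboarding: each label is rotated about the vertical axis so that it points at the viewer. The following Python sketch shows the underlying math only. It is not the project's Unity code, and the function name `billboard_yaw` and the axis convention (y is up, a yaw of 0 faces +z) are assumptions made for the example.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def billboard_yaw(label_pos: Vec3, viewer_pos: Vec3) -> float:
    """Yaw in degrees, about the vertical y axis, that points a label at the viewer.

    Assumed convention: a yaw of 0 faces +z and a yaw of 90 faces +x.
    The height difference is ignored so the label stays upright.
    """
    dx = viewer_pos[0] - label_pos[0]
    dz = viewer_pos[2] - label_pos[2]
    return math.degrees(math.atan2(dx, dz))
```

Recomputing this yaw every frame, after the model's own rotation has been applied, keeps the text readable from wherever the user is standing.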

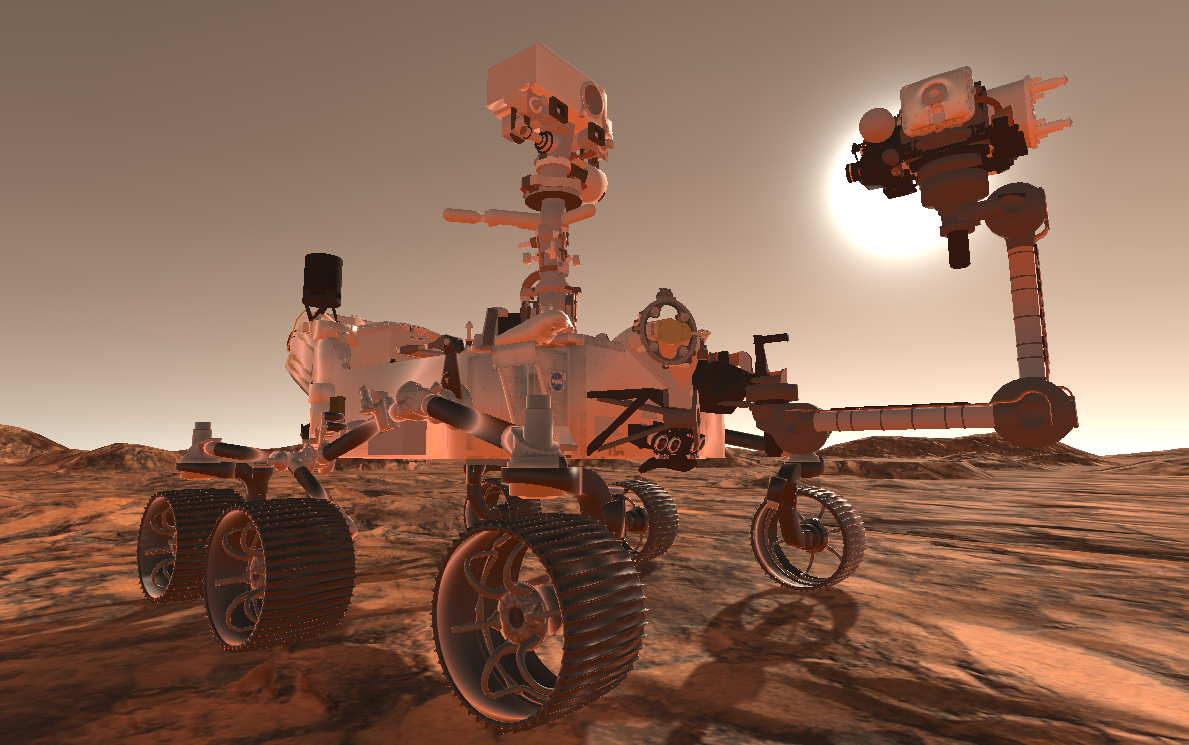

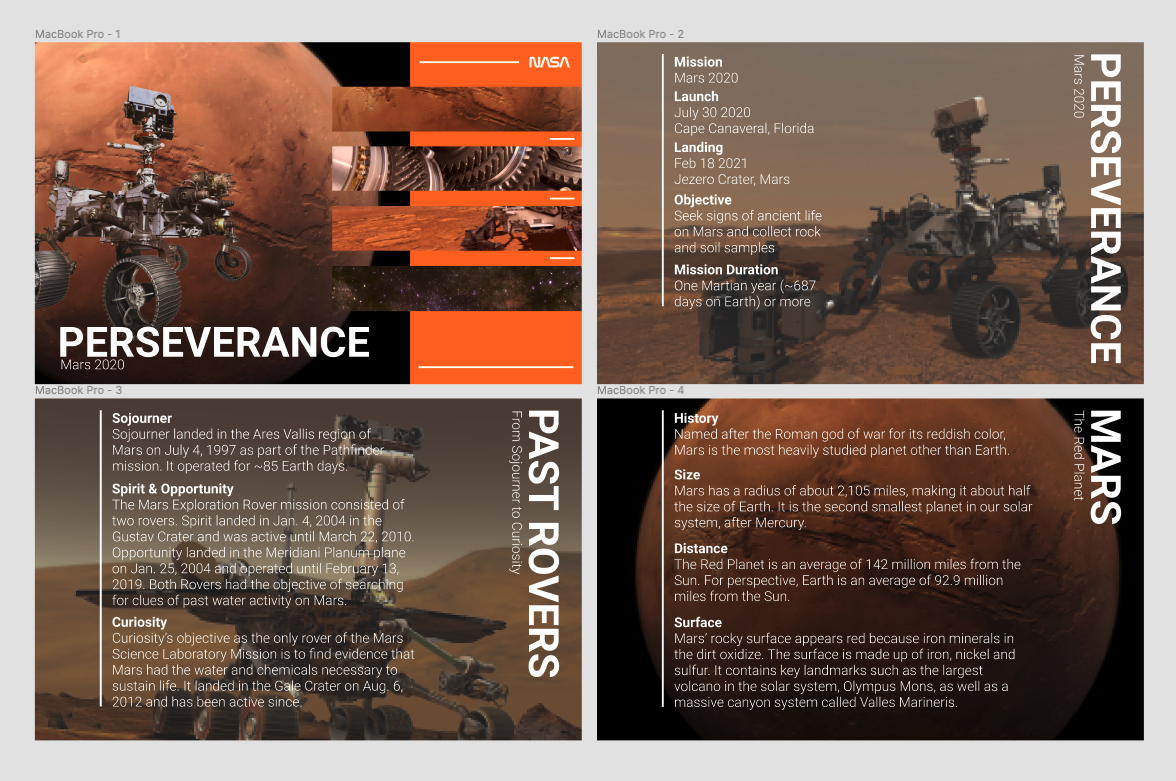

Mars Rover

Exploring the Perseverance Rover

Visuals

With the recent launch of the Perseverance rover, I felt an interactive experience built around this new technology would be informative. Taking a similar approach to the ISS demo, I created an environment representing the surface of Mars using Unity’s terrain editor.

Interaction

The next step in this project was the interactivity itself: what would users do in this environment? I looked to other successful VR experiences and decided to start the scene with the user facing an interactive menu with tabs covering what Perseverance is, rover history, information on Mars, and so on. Selecting a tab shows the relevant information.

Users can then teleport around the scene and view the rover from various angles. I also wanted to implement interactivity with individual pieces of the rover model. That is, a user can grab a piece of the rover, which makes its name and description appear beside it. The user can then let go of the piece to have it “snap back” to its correct position on the rover, providing a hands-on educational experience.
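The grab-and-snap-back interaction can be modeled as a small state machine: each part remembers its rest position, shows its label while held, and moves back toward the rest position once released. The Python sketch below is illustrative only and is not the project's Unity code. The class `RoverPart`, its fields, and the return speed are hypothetical names and values.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class RoverPart:
    name: str
    rest_position: Vec3  # where the part belongs on the rover
    position: Vec3       # where the part currently is
    held: bool = False

    @property
    def label_visible(self) -> bool:
        """The name and description are shown only while the part is held."""
        return self.held

    def grab(self) -> None:
        self.held = True

    def move_to(self, new_position: Vec3) -> None:
        """Follow the hand, but only while the part is being held."""
        if self.held:
            self.position = new_position

    def release(self) -> None:
        self.held = False

    def update(self, dt_s: float, speed_m_per_s: float = 2.0) -> None:
        """Each frame, move a released part toward its rest position."""
        if self.held:
            return
        delta = tuple(r - p for r, p in zip(self.rest_position, self.position))
        distance = math.sqrt(sum(d * d for d in delta))
        step = speed_m_per_s * dt_s
        if distance <= step:
            # Close enough: land exactly on the rest position.
            self.position = self.rest_position
        else:
            scale = step / distance
            self.position = tuple(p + d * scale for p, d in zip(self.position, delta))
```

Moving the part back over a few frames, rather than teleporting it, makes it easier for the user to see where the piece belongs.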

We created a mocap suit with radio-dense beads that could be tracked using XROMM tracking software. We then set up three GoPro cameras to capture the motion from different viewpoints. The idea was to capture the motion data from various kicks, track them, export the 3D transformations, and import them as animations in Unity.

Other Projects

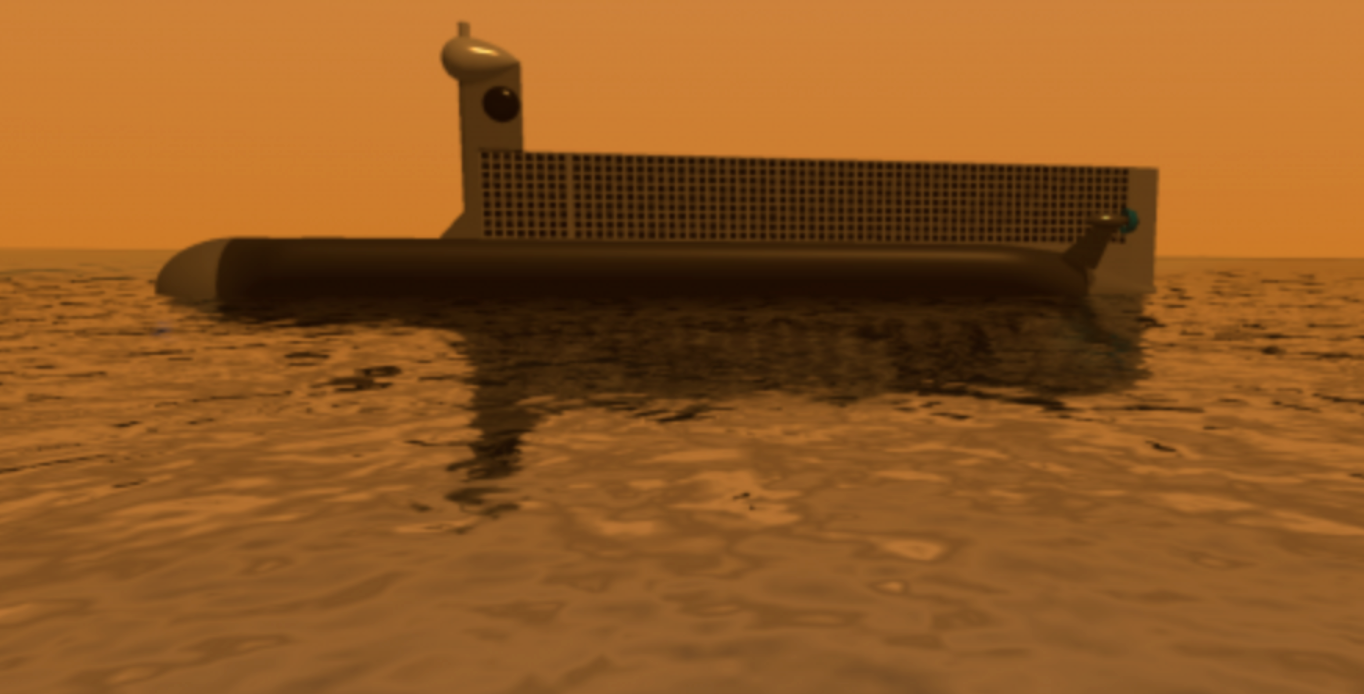

While at the GVIS lab, I also worked on an experimental VR navigation project in which a user could move their hands in a "swimming" motion to explore the liquid bodies on the surface of Titan, Saturn's largest moon.

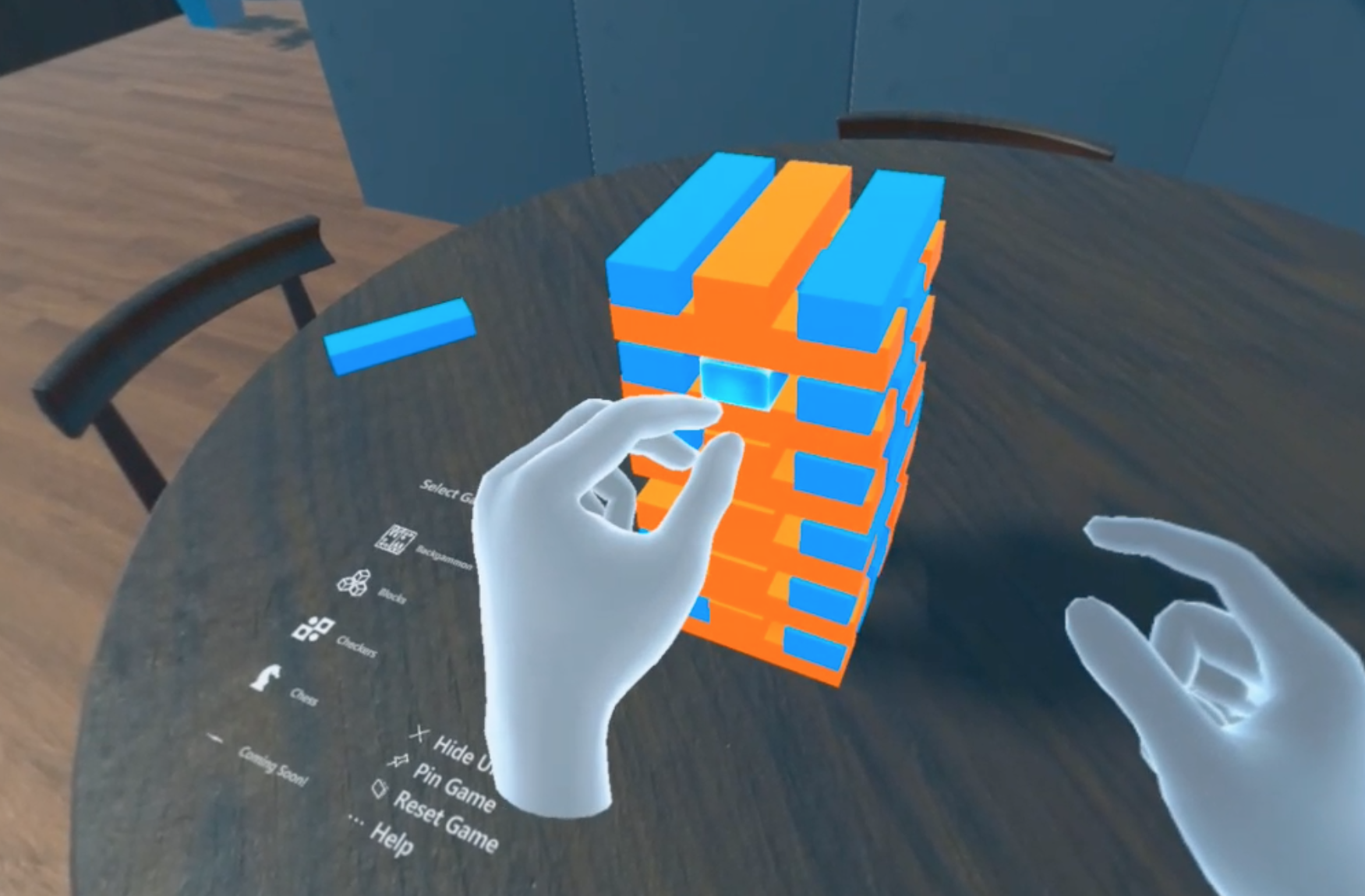

I also created a side project called Bored Games VR, a prototype allowing users to play board games together with nothing but a VR headset and their hands! The application supports standard VR controllers too, but it was built with a focus on letting users interact with games in the most natural way, in an environment where they can play remotely with friends in VR using real-time multiplayer.

The app allows users to change the current game with a minimal menu on the tabletop. Users can also emote by using hand gestures. That is, the application can recognize hand gestures such as a "thumbs up" to render the associated emoticon(s).
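One simple way to recognize a gesture like "thumbs up" is to threshold per-finger curl values together with the direction the thumb is pointing. The Python sketch below illustrates that idea under stated assumptions and is not how Bored Games VR is implemented: the input format (curl from 0 for straight to 1 for fully curled, plus a `thumb_up` value giving how closely the thumb points upward), the thresholds, and the names `classify_gesture` and `emote_for` are all hypothetical.

```python
from typing import Dict, Optional

OTHER_FINGERS = ("index", "middle", "ring", "pinky")

# Hypothetical mapping from a recognized gesture to the emoticon to render.
EMOTES = {"thumbs_up": "👍"}


def classify_gesture(curl: Dict[str, float], thumb_up: float) -> Optional[str]:
    """Return "thumbs_up" when the hand pose matches, otherwise None.

    curl: per-finger curl, 0.0 (straight) to 1.0 (fully curled).
    thumb_up: alignment of the thumb with world up, from -1.0 to 1.0.
    """
    thumb_extended = curl["thumb"] < 0.3
    others_curled = all(curl[finger] > 0.7 for finger in OTHER_FINGERS)
    if thumb_extended and others_curled and thumb_up > 0.7:
        return "thumbs_up"
    return None


def emote_for(curl: Dict[str, float], thumb_up: float) -> Optional[str]:
    """Look up the emoticon for the current hand pose, if any."""
    gesture = classify_gesture(curl, thumb_up)
    return EMOTES.get(gesture) if gesture else None
```

In practice a check like this would usually also require the pose to hold for a short time before triggering, so that a hand passing through the pose does not fire an emote by accident.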

Reflection

Working at the GVIS lab was an incredible experience, where I got to learn from many extremely intelligent research and development experts.

This set of projects pushed me to tackle problems I never thought I would solve, and my mentor helped me learn and grow so that I could make an impact at NASA.

10/10 would do again