NASA Ames - MarsVR

The next generation of Mars exploration 🚀

UX Designer, Full Stack Dev

Aug 2020 - Dec 2020

Disclaimer: This case study is very text-heavy!

Overview

NASA Ames Research Center (ARC) specializes in cutting-edge research and development that advances NASA’s mission of enhancing knowledge and education through novel work across many disciplines.

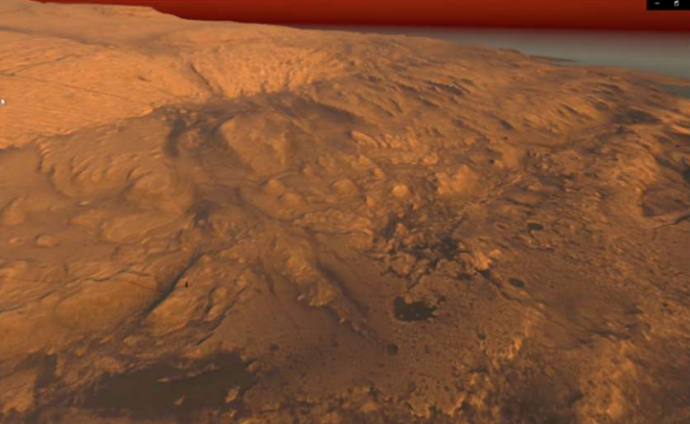

My project at NASA ARC was to support development of an interactive toolkit for observing, interacting with, and assessing Mars data as relayed by the Mars Science Laboratory (MSL). By directly referencing observations from the MSL database, we generated an accurate 3D representation of Mars, as charted by the Curiosity rover, that a user may directly interact with.

Key Interactions

Goals for the app

- Generate an accurate and traversable Martian surface from NavCam image data

- Allow users to enter "fly" mode for a bird's-eye view of the Martian terrain, marked with key UI labels

- Create a data product sorting system allowing users to browse and view all MastCam images to date

- Generate camera cones to visualize the rover’s point of view when capturing images

- Allow users to select a point on the Martian surface and view a plot of its chemical composition from ChemCam data

Implementation

Creating a way to explore MSL data

Generating the Terrain

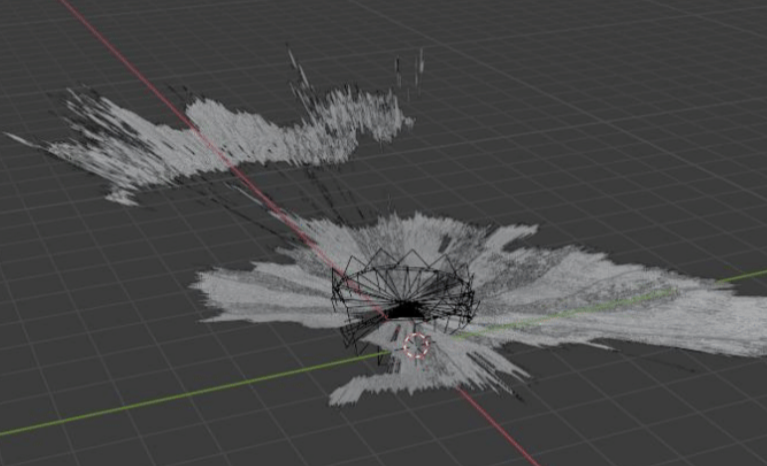

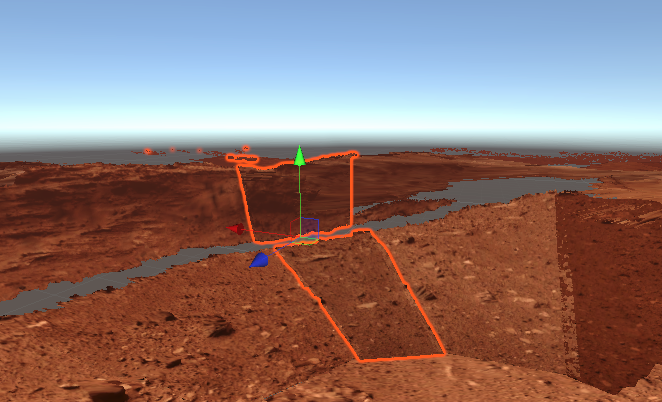

The other interns on my team did some really great work with the Navigation Camera (NavCam) data, which they used to create 3D terrains of the Martian surface. These terrains are the core of the VR app's environment, and the user can walk around on them in VR. They are also needed for generating the 3D camera cones that show where the rover was, relative to the terrain, when it took each image.

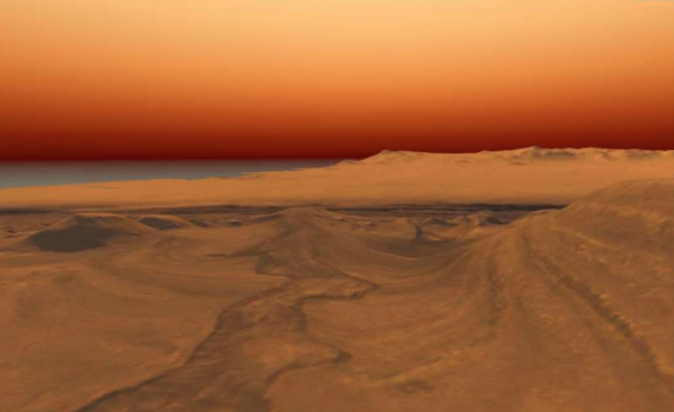

The terrains were generated by using Python to import the NavCam coordinate and depth data, which were then used to build meshes in Blender. These meshes were colorized in an ochre palette to convey an accurate Martian environment.
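To make the mesh step above more concrete, here is a minimal sketch of turning height samples into vertices and triangle faces. It is not the team's actual pipeline: it assumes the NavCam data has already been resampled onto a regular grid, and the function name `grid_to_mesh`, the `spacing` parameter, and the sample values are all hypothetical.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]


def grid_to_mesh(heights: List[List[float]],
                 spacing: float = 1.0) -> Tuple[List[Vertex], List[Face]]:
    """Turn a rectangular grid of height samples into vertices and triangles.

    Assumption: the samples lie on a regular grid, which real rover
    point data generally would not without a resampling step.
    """
    rows = len(heights)
    cols = len(heights[0]) if rows else 0

    # One vertex per sample, stored row by row.
    vertices = [
        (c * spacing, r * spacing, heights[r][c])
        for r in range(rows)
        for c in range(cols)
    ]

    faces = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c  # index of this cell's first corner
            # Split each grid cell into two triangles.
            faces.append((i, i + 1, i + cols))
            faces.append((i + 1, i + cols + 1, i + cols))
    return vertices, faces


# Hypothetical 3x3 patch of heights, in metres.
patch = [
    [0.0, 0.1, 0.0],
    [0.2, 0.5, 0.1],
    [0.0, 0.3, 0.2],
]
vertices, faces = grid_to_mesh(patch, spacing=0.5)
print(len(vertices), "vertices,", len(faces), "faces")
```

Inside Blender, lists in this shape could be handed to `Mesh.from_pydata(vertices, [], faces)` to create the mesh object. The sketch itself stays free of Blender imports so it can run anywhere.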

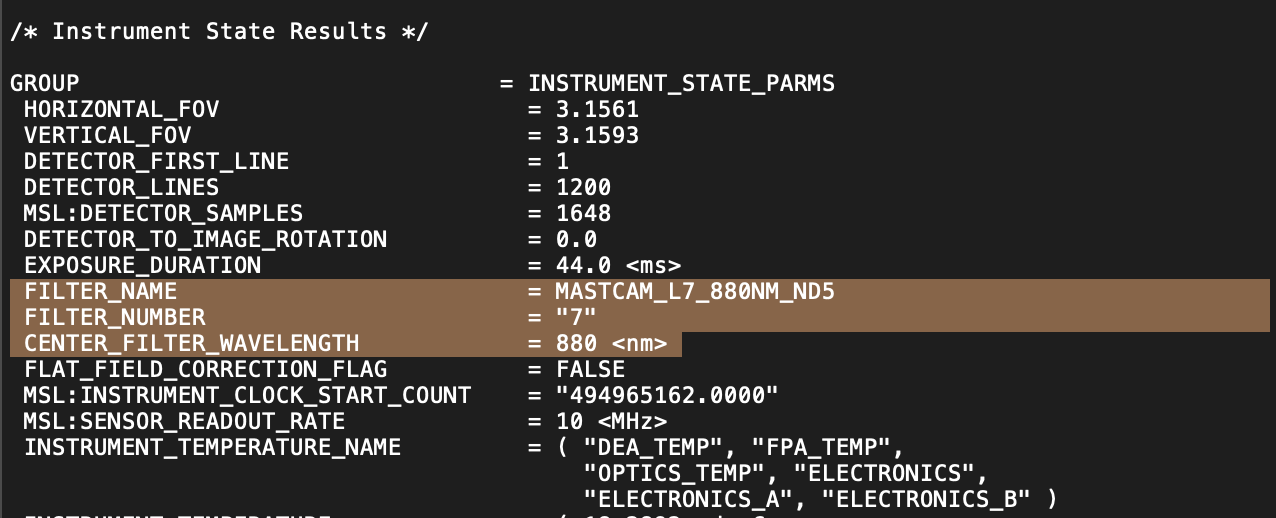

MastCam Image Sorting Engine

Most of my work involved MSL’s MastCam, which produces images of Martian scenery. Each image is associated with a PDS label, a file containing extensive metadata about that image. To display the thousands of available images properly, I had to query the MSL database and automate an engine for parsing data from these PDS labels and presenting it to the user graphically.

In order to do this, I had to generate a CSV file with the data points we care about from all of the labels, meaning my engine had to parse every label one by one, extract the necessary data, and insert it into a larger lookup table that I would then query to get information for the user interface. All of this data was extracted by querying the appropriate MSL database URLs directly.
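The label-to-CSV step described above might look roughly like the sketch below. It assumes labels are plain text made of `KEY = VALUE` lines, and the three keywords in `FIELDS` are chosen for illustration rather than taken from the project's real column list. The sample labels are made up, and fetching them from the MSL database is left out.

```python
import csv
import io

# Keywords to keep. Assumed for illustration; the real engine's columns
# are not listed in this case study.
FIELDS = ["PRODUCT_ID", "PLANET_DAY_NUMBER", "SEQUENCE_ID"]


def parse_label(text: str) -> dict:
    """Collect simple `KEY = VALUE` lines from one label into a dict.

    Simplification: keywords repeated across label sections overwrite
    each other, and multi-line values are not handled.
    """
    values = {}
    for line in text.splitlines():
        if "=" not in line:
            continue  # skip END markers, blank lines, continuation lines
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"')
    return values


def labels_to_csv(labels, out) -> None:
    """Write one CSV row per label, keeping only the FIELDS columns."""
    writer = csv.DictWriter(out, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for text in labels:
        writer.writerow(parse_label(text))


# Made-up label snippets standing in for downloaded label files.
sample_labels = [
    'PRODUCT_ID = "SAMPLE_0001"\nPLANET_DAY_NUMBER = 1000\n'
    'SEQUENCE_ID = "seq_a"\nEND',
    'PRODUCT_ID = "SAMPLE_0002"\nPLANET_DAY_NUMBER = 1000\n'
    'SEQUENCE_ID = "seq_b"\nEND',
]

buffer = io.StringIO()
labels_to_csv(sample_labels, buffer)
print(buffer.getvalue())
```

Writing to any file-like object keeps the sketch easy to try in memory. Passing an open file handle instead of `io.StringIO` would produce the lookup table on disk.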

Loading the images into the scene required a similar process, where the engine queried the URL for the image file associated with a specific observation product ID, instead of the PDS label. Jargon aside, this means that I was able to get the data and images for each observation from the MSL database correctly, which I then presented in the graphical user interface for the user to see.

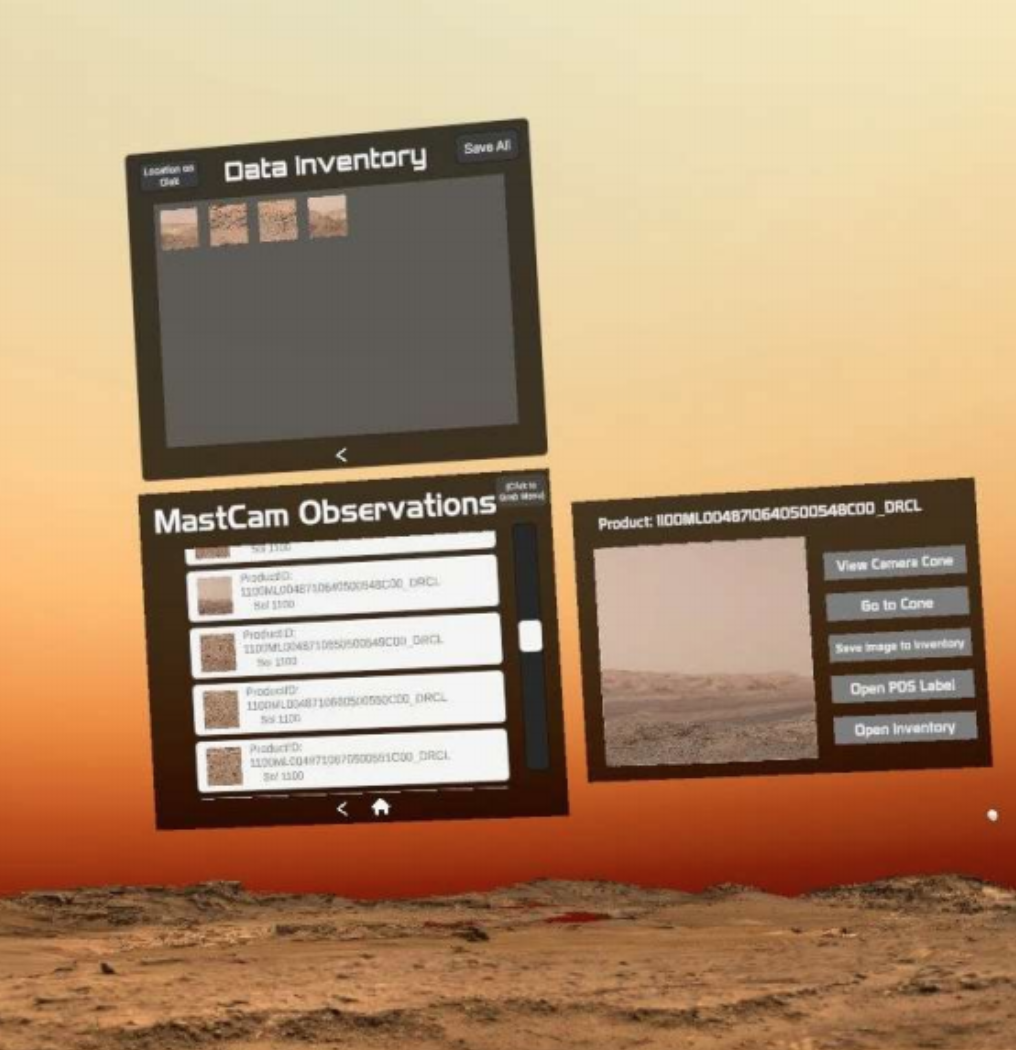

Now that I could present images and data, the bigger underlying issue was data sorting. There are hundreds of thousands of data points in the PDS labels for each sol (a sol being one day on Mars), and we had to figure out how to let users sort through them. This meant building a menu where the user selects a sol and is then taken to a second menu listing all the image sequences for that day.

From there, they could select a sequence and see the individual observations in it. Designing this was more complex than it sounds, and it took a lot of iteration before I arrived at a solution that worked smoothly: storing the values in dictionary data structures.
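As an illustration of the dictionary approach, the sol, sequence, and observation menus map naturally onto a nested dictionary. This is a minimal sketch under assumed names: the row keys `sol`, `sequence`, and `product_id`, the function `build_index`, and the sample rows are hypothetical, not the app's actual schema.

```python
from collections import defaultdict


def build_index(rows):
    """Group observation IDs by sol, then by sequence within each sol."""
    index = defaultdict(lambda: defaultdict(list))
    for row in rows:
        index[int(row["sol"])][row["sequence"]].append(row["product_id"])
    return index


# Made-up rows, shaped like lines read from the lookup table.
rows = [
    {"sol": "1000", "sequence": "seq_a", "product_id": "SAMPLE_0001"},
    {"sol": "1000", "sequence": "seq_a", "product_id": "SAMPLE_0002"},
    {"sol": "1000", "sequence": "seq_b", "product_id": "SAMPLE_0003"},
    {"sol": "1001", "sequence": "seq_c", "product_id": "SAMPLE_0004"},
]

index = build_index(rows)

sol_menu = sorted(index)                # first menu: available sols
sequence_menu = sorted(index[1000])     # second menu: sequences on one sol
observations = index[1000]["seq_a"]     # third menu: observations in a sequence
print(sol_menu, sequence_menu, observations)
```

Each menu level becomes a single dictionary lookup, so the UI never has to rescan the full table when the user drills down.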

When the user selects an observation from the list, the engine displays the corresponding image in a preview window and offers options to view its camera cone or add it to an image gallery, which can then be downloaded to a directory of the user's choice.
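For readers curious about the geometry behind a camera cone, one simple way to model it is as a rectangular pyramid: the apex sits at the camera and four corners mark the edge of the field of view at some distance. The sketch below is not the app's implementation. It assumes an east/north/up axis convention with azimuth measured clockwise from north, which may differ from the frames used in the PDS labels, and every name and parameter in it is hypothetical.

```python
import math


def cone_corners(apex, azimuth_deg, elevation_deg, hfov_deg, vfov_deg, length):
    """Return the four far corners of a view pyramid with its tip at `apex`.

    Assumed convention: x = east, y = north, z = up; azimuth is clockwise
    from north and elevation is measured up from the horizon.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)

    # Orthonormal camera basis: viewing direction, right, and up.
    forward = (math.cos(el) * math.sin(az),
               math.cos(el) * math.cos(az),
               math.sin(el))
    right = (math.cos(az), -math.sin(az), 0.0)
    up = (-math.sin(az) * math.sin(el),
          -math.cos(az) * math.sin(el),
          math.cos(el))

    # Half-extents of the far rectangle per unit of distance.
    half_w = math.tan(math.radians(hfov_deg) / 2)
    half_h = math.tan(math.radians(vfov_deg) / 2)

    corners = []
    for sx, sy in ((-1, -1), (1, -1), (1, 1), (-1, 1)):
        corners.append(tuple(
            apex[i] + length * (forward[i]
                                + sx * half_w * right[i]
                                + sy * half_h * up[i])
            for i in range(3)
        ))
    return corners


# Camera at the origin looking due north along the horizon.
for corner in cone_corners((0.0, 0.0, 0.0), 0.0, 0.0, 90.0, 90.0, 1.0):
    print(tuple(round(v, 3) for v in corner))
```

Connecting the apex to each corner, and the corners to each other, gives the wireframe that can be drawn over the terrain.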

UX Aids

Because a lot of the complex data can be intimidating or verbose for more casual users, we included certain aids to help the user in their experience of MarsVR.

The first of these was a 3D model of the Curiosity rover, which could be used to help users see the instruments being used to collect the data and understand how they work via modal windows or hands-on interaction.

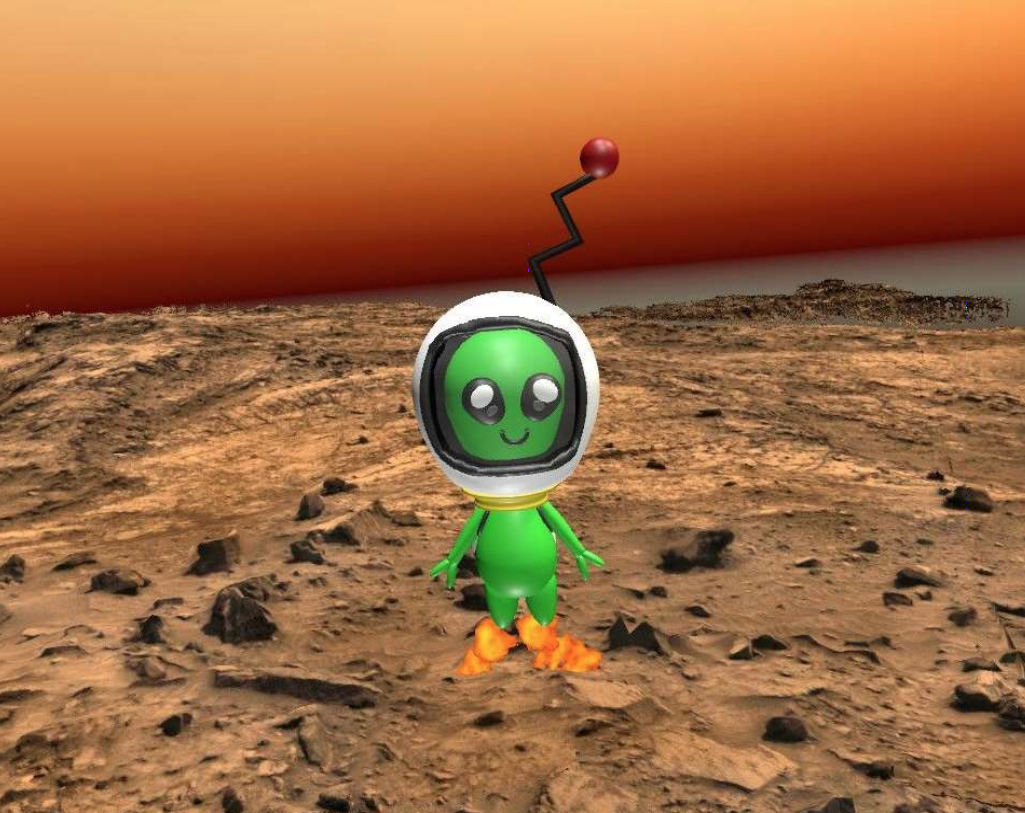

The second of these aids was the "Mars Buddy," a small green Martian character designed to walk users through the UI and provide FAQ support. Users can even set the buddy to follow them around the Martian surface, or play catch with it!

Future Work

MarsVR is still in its early stages, and a lot more functionality is planned for future iterations. Future versions will incorporate additional MSL datasets, as well as new data from the Mars 2020 Perseverance rover, which landed on Mars on February 18, 2021.

Future Features:

- Automated camera cone generation based on PDS label coordinate data

- ChemCam data viewing

- Allow users to select any point on Mars terrain

- View plot of chemical composition for that point

- Visualization and analysis of other MSL datatypes (ChemCam, DAN, CheMin, APXS, and MAHLI)

- A virtual tour of Mars led by the "Mars Buddy," complete with voiceover

Working with the MSL datasets was a very challenging but fun experience that taught me a great deal about presenting complex data to users in comprehensible ways.